A Tale of Two Top-Downs: Part II

Coarse-to-fine Processes

In Part I, I discussed the fact that (at least) two distinct kinds of processes get labeled as “top-down”. The first, blueprint processes, can neither produce nor grapple with complexity. The second, presented here in Part II, are consistent with the generation of complexity. These are coarse-to-fine processes.

Coarse-to-fine

Real-world complex systems typically have multiple scales of structure and behavior. That is, they have coarse aspects (think of your skeleton and muscles, which operate at a scale not much smaller than your whole body, with many pieces acting in tight coordination) and fine ones (think of immune function, where very small things detect other small things such as viruses and bacteria). Coarse features are what you can still see if you “blur the picture”, whereas the fine-grained details are lost.

One way to generate a system that has multiple scales is to start with the coarse aspects and then progressively work toward finer and finer details. Unlike in a blueprint process, the details don’t need to be pre-specified. Instead, the coarse features generated first serve as constraints and context for processes operating at finer scales, which fill in the details.

This is in fact one way to generate certain kinds of fractal shapes: begin with a coarse geometrical figure, and iterate a transformation that adds finer and finer details. The iteration can continue indefinitely, which is why one can “zoom in” on these fractals and always find more detail.

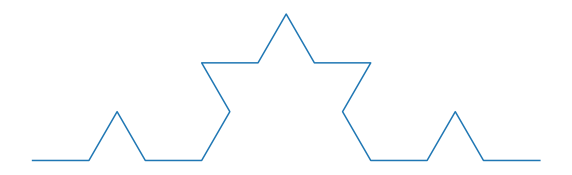

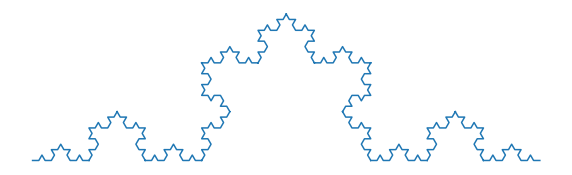

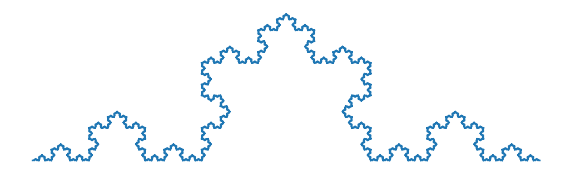

As an example, here are images that show the construction of the Koch curve. The procedure is as follows: start with a line segment and mark the points that divide it into thirds. Remove the middle third, and bridge the gap with two new line segments, each one third the length of the original, that meet at a point. Now do this for each new line segment. And again. And again…
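The construction can be sketched in a few lines of Python. This is a minimal illustration, not the code behind the images: points are stored as complex numbers (an assumption chosen for convenience), and the names `koch_step`, `koch_curve` and `curve_length` are hypothetical. Each pass replaces every segment with four segments one third as long, so after n iterations there are 4**n segments and the total length is (4/3)**n times the original.

```python
import cmath
import math


def koch_step(points):
    """Replace every segment a->b with four segments, each one third as long."""
    turn = cmath.exp(1j * math.pi / 3)  # rotation by +60 degrees
    out = [points[0]]
    for a, b in zip(points, points[1:]):
        third = (b - a) / 3
        left = a + third            # end of the first third
        right = a + 2 * third       # start of the last third
        peak = left + third * turn  # apex of the bump that replaces the middle third
        out.extend([left, peak, right, b])
    return out


def koch_curve(iterations):
    """Start from the unit segment and refine it repeatedly, coarse to fine."""
    points = [0j, 1 + 0j]
    for _ in range(iterations):
        points = koch_step(points)
    return points


def curve_length(points):
    """Sum of the lengths of all segments in the polyline."""
    return sum(abs(b - a) for a, b in zip(points, points[1:]))


curve = koch_curve(4)
print(len(curve) - 1)  # 256 segments
```

Plotting the real and imaginary parts of `curve` (for example with matplotlib) gives figures like the ones above. Note that every vertex from an earlier pass is kept, so each new iteration only adds detail at a finer scale and leaves the coarse shape in place.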

One of the cool things about building a fractal this way is that the shapes are self-similar. The small bits of the curve are shaped like the large parts. Trippy!

This self-similarity arises because the process is, quite literally, formulaic: the same rule is applied at every scale. The example is only intended to give you a sense of what I mean by coarse-to-fine, namely the progressive addition of finer and finer details, a process that can in principle be carried out to arbitrary levels of detail.

To show how this type of “top-down” process is not at odds with self-organizing processes, but can work with them, let’s construct another example that has some interesting properties worth dwelling on for a moment.

The following figures are generated by a cellular automaton model that embodies a pattern-forming process. In this case, the simulation approximates the process that produces Turing patterns. Without getting into detail, each cell is influenced to be like its close neighbors (e.g. if most of its close neighbors are black, it is pushed toward black) and unlike the cells in the ring just beyond those neighbors (e.g. if most of the cells in that outer ring are black, it is pushed toward white).

At any moment, a cell is either white or black — wherever the balance happens to fall based on the collective influence of these two surrounding neighborhoods.

Clearly, one of the major parameters of the model is the size of the neighborhoods: how far away can a cell be and still be in the ‘nearby’ neighborhood? And the neighborhood beyond that? Those will be the parameters we vary in what follows.
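The post doesn't spell out the exact update rule, so the following is a minimal sketch under stated assumptions, in the spirit of classic activator-inhibitor cellular automata. Assumptions: the grid wraps around at its edges (a torus); the near neighborhood is a disk of radius `r_near` that includes the cell itself, and the far neighborhood is the ring between `r_near` and `r_far`; each cell compares the fraction of black cells in the two neighborhoods, with a hypothetical weight `w` on the far ring; ties leave the cell unchanged; all cells update at once. The names `turing_step`, `neighborhood_kernels` and `count_black` are illustrative only. Counts are computed with an FFT-based circular convolution so that large radii such as 40 and 80 stay fast.

```python
import numpy as np


def neighborhood_kernels(shape, r_near, r_far):
    """Near disk and far ring on a torus, both centered on cell (0, 0)."""
    rows, cols = shape
    dy = np.minimum(np.arange(rows), rows - np.arange(rows))  # wrap-around row distance
    dx = np.minimum(np.arange(cols), cols - np.arange(cols))  # wrap-around column distance
    dist = np.hypot(dy[:, None], dx[None, :])
    near = (dist <= r_near).astype(float)
    far = ((dist > r_near) & (dist <= r_far)).astype(float)
    return near, far


def count_black(grid, kernel):
    """Number of black cells under `kernel` around every cell (circular convolution)."""
    counts = np.fft.ifft2(np.fft.fft2(grid) * np.fft.fft2(kernel)).real
    return np.rint(counts)  # strip floating-point noise so counts are exact integers


def turing_step(grid, r_near, r_far, w=1.0):
    """One synchronous update of a 0/1 grid (1 = black, 0 = white)."""
    near, far = neighborhood_kernels(grid.shape, r_near, r_far)
    near_frac = count_black(grid, near) / near.sum()
    far_frac = count_black(grid, far) / far.sum()
    # Black near neighbors pull toward black; black far neighbors pull toward white.
    score = near_frac - w * far_frac
    return np.where(score > 0, 1, np.where(score < 0, 0, grid))


# Base layer as described in the post: random start, radii 40 and 80, five steps.
rng = np.random.default_rng(0)
grid = rng.integers(0, 2, size=(256, 256))
for _ in range(5):
    grid = turing_step(grid, 40, 80)
```

The result is a 0/1 array that can be displayed as an image. Changing `w` shifts the balance between the pull of the near disk and the push of the far ring; the value 1.0 is an assumption, not a parameter taken from the post.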

We begin the simulation by setting each cell randomly to white or black, and then let it evolve. We will initially run the model for five time steps and then stop. Under most conditions this model eventually settles into a stable, fixed pattern, but five steps is a good-enough heuristic for our purposes, even if the pattern hasn’t fully settled yet.

Here, as we will see, the neighborhood parameters are set to be relatively large. Specifically, the nearby radius of interaction is set to 40 cells, and the outer radius of the outer neighborhood is set to 80 cells. Let’s have a look at these initial steps.

In the first image you can see the size of the cells: the little black and white pixels. The patterns that rapidly form as the internal forces resolve themselves are much coarser than the granularity of the cells. In some sense we have done the opposite of what I have been discussing, moving from fine to coarse granularity, but we will use this pattern as a “base layer” to iterate from.

Note: so far, the pattern is decidedly NOT a result of a blueprint — it is a direct result of a self-organizing process.

What we will do next is take one set of stripes, the black ones in this case, and run the same kind of process over those stripes, only at a finer granularity. As a first step, we randomize the cells within those stripes to white or black. This keeps things from becoming too orderly; there’s nothing wrong with orderly, but I prefer the results obtained this way. The neighborhood radius parameters are set to 6 and 12 for the nearby and further-away neighborhoods, respectively.

Now we have patterns inside of patterns. The coarser pattern served as a constraint for a finer-grained process to produce a more detailed pattern.

There is something important worth noting here: the original black stripe did not merely serve as a mask for a finer-grained pattern; the two scales are interacting. You can see this if you direct your attention to the boundaries of the initial coarse stripes: they are accentuated by the fine-grained patterns. The forces to which the fine process is sensitive span the boundary, even though the construction of the pattern is constrained to lie within the initial coarse black stripe. Interesting things happen at boundaries, as a general rule.

We could iterate in the same way again, this time taking the white stripes of the fine-grained patterns as our new “domain”. The radius parameters are now 2 and 4.
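The nested procedure can be sketched by restricting the same kind of update to a shrinking “domain” mask. This is a hedged sketch, not the author's code. It assumes a disk-and-ring rule on a toroidal grid (near disk of radius `r_near` including the cell itself, far ring out to `r_far`, fractions of black cells compared with a hypothetical weight `w`, ties left unchanged), five steps per layer (the post only states five steps for the base layer), and a hypothetical `layers` list of `(r_near, r_far, colour)` entries. Cells outside the domain are frozen, but neighborhood counts still read the whole grid, which is one way to get the cross-boundary interaction described above: a fine cell near the edge of a coarse stripe still feels the cells on the other side.

```python
import numpy as np


def neighborhood_kernels(shape, r_near, r_far):
    """Near disk and far ring on a torus, both centered on cell (0, 0)."""
    rows, cols = shape
    dy = np.minimum(np.arange(rows), rows - np.arange(rows))
    dx = np.minimum(np.arange(cols), cols - np.arange(cols))
    dist = np.hypot(dy[:, None], dx[None, :])
    return (dist <= r_near).astype(float), ((dist > r_near) & (dist <= r_far)).astype(float)


def count_black(grid, kernel):
    """Black cells under `kernel` around every cell, via FFT circular convolution."""
    return np.rint(np.fft.ifft2(np.fft.fft2(grid) * np.fft.fft2(kernel)).real)


def masked_turing_step(grid, domain, r_near, r_far, w=1.0):
    """Update only cells where `domain` is True; counts still read the whole grid."""
    near, far = neighborhood_kernels(grid.shape, r_near, r_far)
    score = count_black(grid, near) / near.sum() - w * count_black(grid, far) / far.sum()
    updated = np.where(score > 0, 1, np.where(score < 0, 0, grid))
    return np.where(domain, updated, grid)


def nested_patterns(shape, layers, steps=5, seed=0):
    """Run each (r_near, r_far, colour) layer inside the previous layer's `colour` cells.

    A colour of None (used for the first layer) means "use the whole grid".
    """
    rng = np.random.default_rng(seed)
    grid = np.zeros(shape, dtype=int)
    domain = np.ones(shape, dtype=bool)
    for r_near, r_far, colour in layers:
        if colour is not None:
            domain = domain & (grid == colour)  # narrow to one colour of the last layer
        # Re-randomize only inside the current domain, then let the pattern form.
        grid = np.where(domain, rng.integers(0, 2, size=shape), grid)
        for _ in range(steps):
            grid = masked_turing_step(grid, domain, r_near, r_far)
    return grid


# Radii from the post: 40/80 everywhere, 6/12 inside the black stripes,
# then 2/4 inside the white parts of that finer pattern.
pattern = nested_patterns((400, 400), [(40, 80, None), (6, 12, 1), (2, 4, 0)])
```

Because each layer writes only inside its own domain, the coarse white stripes from the first layer are never touched by the finer layers, while the finer layers still read across their boundaries. Swapping in a different update function per layer would correspond to the post's remark that nested layers need not use the same pattern-forming process.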

Patterns inside patterns inside patterns. There is, in principle, no limit to the number of nested layers. Nor does each layer need to use the same pattern-forming process; layers could also vary in additional parameters we didn’t discuss above.

Above we presented the idea in a spatial and geometric context, and it is indeed quite relevant there. For instance, in the pattern language developed by Chris Alexander, each pattern is tied to a specific scale, with some patterns nested inside of (and serving to fulfill) other, larger patterns.

In his book of the same name (A Pattern Language), he explicitly advocates working in this manner: from larger to smaller patterns, always paying attention to how each smaller pattern serves to bring more life to the larger patterns it sits within. The large patterns are in place before we even arrive. Living architecture responds to the context given by nature; it does not proceed by smoothing the world in order to impose itself on it, as many modern building practices do.

It is also central to the field of permaculture. Permaculture is essentially a set of principles and design concepts intended to aid in the development of agriculturally productive land that is contextually sensitive and that not only avoids depleting local resources but improves them over time. Again, when designing a permaculture site, attention is first focused on the large-scale, coarse patterns of the land. Where will the home sit? Where will the gardens and orchards go? Where will the boundary between tended and untended land fall? Roughly.

The details can, and must, come later. Freezing them too early will ensure plenty of “oh shit” moments. You can make these moments small, or even non-existent, by opting for easily reversible decisions up front and avoiding costly-to-reverse ones until you have a much deeper understanding.

And this last point gets at something essential: the coarse-to-fine progression is essential not only in spatial projects but in abstract spaces as well. It is a strategic advantage for intentions to be fuzzy early on. Having a “rough idea” beats having an “exact image” in complex settings. Eventually the details have to be worked out, but NOT as a first step: you will get them wrong.

This is in fact one of the key differences between classical “systems engineering”, which is driven by the articulation of pre-specified requirements, and complex systems engineering, which leverages evolutionary processes to generate better-fit solutions to a range of problems and challenges over time. In complex systems engineering, defining the “outcome space” coarsely enables engineers and developers to use their creativity in exploring many possible ways to address existing challenges. Various forms are offered, and the ones that fit best go on to be iterated on, refined, and crystallized into very well-fit solutions. If the outcome space were defined too precisely, it would reduce the richness of variety that is essential for evolutionary exploration.

Another context in which coarse-to-fine shows itself to be absolutely essential is the way the military takes action. The “Commander’s intent” sets out a coarse view of what is to be achieved, and lower-level units develop and execute operations to fulfill that intent. Different kinds of assets have different degrees of autonomy in pursuing that intent; for instance, Special Operations Forces operate in small teams with a large degree of autonomy so that they can solve especially complex challenges in pursuit of the big picture.

The modern attitude harbors the implicit assumption that ‘more detail’ is always better. This is simply wrong. Coarseness, fuzziness, and blurriness are assets when used properly.

In the spirit of Chris Alexander, it is no exaggeration to say that coarse-to-fine processes produce and support LIFE, while blueprint processes are DEAD.

These two “top-downs” are fundamentally different, and this difference is essential to understand when it comes to generating and responding to complexity.

I really appreciate this distinction. In my work with software teams, I’ve encountered a recurring challenge: how to encourage local (team-scoped) autonomy over the details of an initiative that comes from “above”. Coarse-to-fine offers a thought-provoking guide to approaching the superficial tension inherent in that goal. ;)

Quite provocative. A siren warning against a priori design rigidity. The self-organizing pattern-design algorithms might be mind-blowing as self-generating NFTs. Just a thought.